Making the benefits of lens surgery tangible.

Our joint projects are characterized by a strong drive to innovate, on the customer's side and on ours. The open-mindedness to test technologies that have never before been used to create insightful experiences enables collaborative research and learning, which results in genuinely new customer experiences and product innovations.

Both sides know that no layperson will understand the complex processes in the eye merely by reading a dry explanatory text. Instead, patients need to see it with their own eyes and experience, interactively and playfully, how 1stQ's lenses affect their vision: in the real and virtual world, with the appropriate technologies, and in a way that is as easy and intuitive to understand as possible.

💡 In this use-case spotlight series, we show how we implemented this task iteratively, always in close technical and conceptual coordination with 1stQ.

Our task:

🎬 First joint interactive web application: Optimal Natural Vision (ONS) & cataracts

Our solution:

During our collaboration, we tested various didactic methods to let diverse target groups experience intraocular lenses for themselves. The first web application presents an interactive story and engages viewers on an emotional and intellectual level with impressive panoramic views and atmospheric cello music.

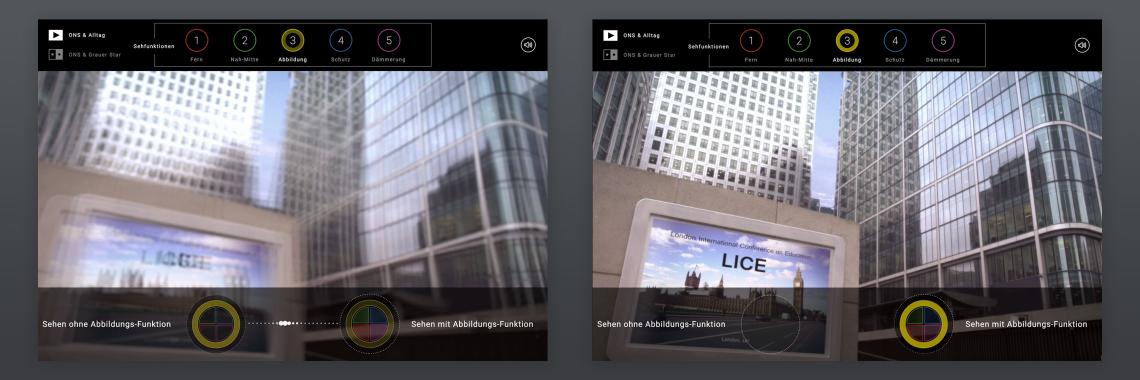

In five short video sequences, we show which functions the natural eye lens fulfills for our vision. You can—and have to!—try out each function yourself by slowly moving a slider from left to right.

A businessman travels to London by car, goes sightseeing, attends a conference and ends the evening on the banks of the River Thames to the atmospheric sounds of a cello. At each of these narrative stations, a single factor of his vision is corrected playfully by the users of the web application, who do so by moving a lens with the mouse cursor.

For example, vision distortion is corrected in front of the Conference Center and night vision is restored on the dark banks of the Thames in the evening—so that we can not only hear but also see the cellist sitting on the bench, who provides the atmospheric promenade soundtrack.

🤯 Behind-the-Scenes facts

🎬 The videos for the web application were shot on location in London, with a professional director and a cameraman.

🌒 We shot the video footage of the banks of the Thames during the day, and digitally turned day into night in post-production to visualize the night-sight function. To make this look realistic, we digitally modeled the riverside lanterns to really showcase the lighting coming from them.

🧑‍💻 With the right combination of digital tools and knowledge of the structure of the human eye, human visual blur can be realistically recreated in a still image. In video, however, this was a particular challenge. After Effects could not do it, so we solved it with dedicated tools that we programmed for this purpose and an integrated technical approach.

⁉️ Too much attention to detail? No! Explicit *need* for detail.

Immersion is fragile. If a movie looks unrealistic, viewers quickly lose interest and switch it off. That's why it's particularly important to depict details such as blurring and lighting authentically—and Rüdiger Dworschak and Sina Mostafawy know this very well.

🤝 That's why RMH MEDIA and 1stQ are a perfect match, working together to produce innovative solutions at the intersection of science communication, marketing, technology and aesthetics.

We look forward to presenting more examples of our collaboration in the coming weeks!

Find the web application here:

Were you inspired by this use case?

We are always happy to answer questions about the projects and would love your feedback. Let's talk about communication solutions for your individual content strategies.